▬▬▬▬▬▬▬▬▬▬▬▬▬▬ஜ۩۞۩ஜ▬▬▬▬▬▬▬▬▬▬▬▬▬▬

In an IBM Cognos TM1 server, by default numeric values are stored as 0. When the cell content is retrieved either in TI or through rules, a value of zero is returned. This means TM1 does NOT differentiate between missing data (null value) and zero.

In other words, you will not be able to differentiate the cells for

which there does not exist data, with the ones where data is 0.

To work with situations where we need to differentiate between missing data and zero, we can use UNDEFVALS in the rule of an IBM Cognos TM1 cube. If UNDEFVALS is used then the default value is changed from 0 to a special undefined value.

Let’s create a simple 3 dimensional cube available from Planing Sample database, namely:

- plan_version

- plan_business_unit

- plan_department

We will be working with integer data alone. The cube looks like this, without any rules;

Let us go ahead and add this as the 1st line of the rules file and save the rules. Notice the view

All

the Zeroes have vanished and instead you will see blanks. At the

intersection of UK and Direct, key a value of 0, turn on zero

suppression and recalculate the view. It will appear like this:

It means that, zero suppression will actually turn off the special undefined value,

instead of zeroes. It is a side effect of using UNDEFVALS in the rule

definition. This unintended consequence is visible in Turbo Integrator

(TI) as well.

If you were

to save this view and use it as source in TI (see screen for the view

spec), then perform an AsciiOutput of the data records, you will get

zeroes as part of your data.

How to reset data in a cube with UNDEFVALS?

In a cube without

UNDEFVALS, if there is a value in an intersection of a cube and you hit

delete, then the intersection value is reverted to zero. Let us

consider ways to accomplish this in a cube with UNDEFVALS.

Manual Option

This is applicable only if you have limited cells to work with. As mentioned in this IBM KB,

by inputting a value of 4.94066e-324 in the intersections. To

illustrate this, look at the screenshot below. The LHS of the picture

shows where a value of zero was present. And on the RHS by inputting

the aforementioned value and hitting recalc, the zeroes goes away.

Turbo Integrator (TI) Option

- CubeClearData – This will reset all the non-rule driven intersections to the undefined/null value

- Sometimes however you may want to reset only part of cube and not the entire cube. Our instinct is to use ViewZeroOut. However ViewZeroOut on an UNDEFVALS cube will only ZERO out the intersections, it will NOT reset the intersections to undefined/null value

- In such situations, you can use CellPutN a value of 4.94066e-324 in those intersections and reset the data to undefined/null value

- Alternately you can use new function in 10.2 UndefinedCellValue for this case (valid in both rules and TI). This function returns either 0 (on a cube without Undefvals declaration) or special undefined value (on a cube with Undefvals declaration) – see below snippet

vx_UndefValue = UndefinedCellValue (vs_CubeName);

CellPutN (vx_UndefValue, vs_CubeName, ‘FY 2003 Budget’, ‘UK’, ‘Finance’);

10.2 Reference Guide documentation incorrectly states that cube name is an optional parameter; APAR PI50000 is raised to correct it. If the cube name is not supplied then the function returns 0.

How to check for an undefined/null value in cube?

Foremost

of all, to know which cubes have UNDEFVALS declaration, you can loop

through the }Cubes dimension and pass it to UndefinedCellValue function;

an AsciiOutput of this call will list such cubes. This is possible

only in 10.2.

Well,

let’s suppose you do have a cube with UNDEFVALS. When you want to work

with such cubes, you’d like to know whether or not, given intersection

has undefined/null value in it. To do that you can utilize the ISUNDEFINEDCELLVALUE function (valid in both rules and TI)

IsUndefinedCellValue

function compares the passed value to the cube’s default view and

returns 1 if true; otherwise it returns 0. Now let’s go ahead and add

below rule line

Undefvals;

[‘Germany’] = N:

IF ( IsUndefinedCellValue ( [‘UK’] ) = 1

, UndefinedCellValue

, 88

);

I am trying

assign a value of 88 for all cells of Germany, where corresponding

cells of UK have a valid value (including 0). With some sample values

assigned, a view of the Cognos TM1 cube with UNDEFVALS will look like

below (please note Marketing and Engineering are NOT the children of

Sales, they are children of Total Org):

Let’s now add Skipcheck and Feeders to the rules file to see its impact with zero suppression on the cube view:

SKIPCHECK;FEEDERS;

Undefvals;

[‘Germany’] = N:

IF ( IsUndefinedCellValue ( [‘UK’] ) = 1

, UndefinedCellValue

, 88

);

[‘UK’] => [‘Germany’];

Notice that a value of

zero (UK, IT) is able to FEED the (Germany, IT) intersection. This is

one of the impact of using UNDEFVALS in the cube.

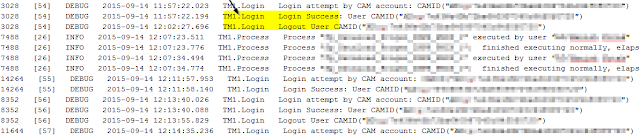

If you want to find out

the actual value of the special undefined value that IBM Cognos TM1

server assigns to your cube, then you can write a small TI snippet to

find that out. See screenshot below: